This is essay #5 in the Symposium on “Is the Orchestrator Dead or Alive?” You can read more posts from Hubert on his Substack, Hubert’s Substack.

In a previous post, I talked about how data lineage can be difficult for stream processing workflows. Streaming tasks are asynchronous and have no start or end, which makes them a poor fit for existing data lineage solutions. Lineage tools are mainly built for batch processing, which expects synchronous tasks with a defined start and end. The same issue exists for workflow orchestrators.

Workflows are everywhere. If you’ve ever viewed a Spark DAG or a Flink plan, you’ll know that those are workflows. If you’ve ever run EXPLAIN on a SQL statement in a popular database, you’ve also seen a workflow. When you implement a saga pattern for your microservices, that is also a workflow. These workflows contain business logic that is constantly updated to save cost and improve user experience. Workflows need to be agile, and we need tools that enable this agility so we can react quickly to changes in the business.

Event-driven applications and (a)synchronous tasks

To better understand asynchronous applications, we’ll need to understand Event Driven Architecture (EDA). EDA is a category of solutions that are triggered by events. An example of an event is a file appearing in a directory or a message appearing in a queue. EDA applications are always running and listening for an event to occur. When one does, they act on it by either running a task or processing data.

EDA applications do not require the producer of the event to know about the consumer of the event. The producer and consumer do not communicate synchronously the way request-response RESTful APIs do. Therefore EDA applications are asynchronous.
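To make the decoupling concrete, here is a minimal sketch in plain Python. It assumes an in-memory `queue.Queue` standing in for a real message broker, and the names `producer`, `consumer`, and `run_decoupling_demo` are made up for illustration. The point is only that the producer writes to the queue and never references the consumer.

```python
import queue
import threading


def producer(events: queue.Queue, paths: list[str]) -> None:
    """Publish 'file arrived' events; the producer never references a consumer."""
    for path in paths:
        events.put({"type": "file_arrived", "path": path})
    events.put(None)  # sentinel so this demo can stop; a real listener keeps running


def consumer(events: queue.Queue, handled: list[str]) -> None:
    """Block until an event arrives, then act on it."""
    while True:
        event = events.get()
        if event is None:
            break
        handled.append(f"processed {event['path']}")


def run_decoupling_demo() -> list[str]:
    events: queue.Queue = queue.Queue()
    handled: list[str] = []
    listener = threading.Thread(target=consumer, args=(events, handled))
    listener.start()  # the consumer is already listening before any event exists
    producer(events, ["a.csv", "b.csv"])
    listener.join()
    return handled
```

Calling `run_decoupling_demo()` returns one entry per published event, in the order the events were put on the queue.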

Event-driven orchestration vs stream processing

Orchestration involves using a tool like Airflow or Dagster to execute tasks in a workflow. These tools make it easy to build, schedule, and monitor complex workflows. Workflows in these tools are modeled as DAGs (directed acyclic graphs) and are typically executed on a schedule. Event-driven orchestration follows EDA semantics and can be done with these same tools. Instead of running on a schedule, the workflows are triggered by an event.

Stream processing is also an EDA. Stream processing involves listening for events that have occurred in a data store or messaging system and processing those events while the data is in motion.

The main difference is that the events in an event-driven orchestration don't hold business data while the events in a stream processor do hold business data. Examples of business data would be account records, user records, product records, etc.

Can orchestrators do stream processing?

Both Airflow and Dagster have the ability to subscribe to an event to trigger a workflow. In both tools, the components that do this are called sensors. Sensors allow the orchestrator to listen for events wherever they may occur. Some examples would be listening for a file to appear, waiting for an HTTP request, or even consuming from a Kafka topic.
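The idea behind a sensor can be sketched in a few lines of plain Python. This is not the Airflow or Dagster sensor API; `file_sensor`, `trigger_workflow`, and `run_sensor_demo` are hypothetical names, and a real sensor would poll repeatedly on an interval rather than twice.

```python
import tempfile
from pathlib import Path
from typing import Callable, Optional


def file_sensor(path: Path, on_event: Callable[[Path], str]) -> Optional[str]:
    """One poll: if the file exists, trigger the workflow; otherwise do nothing."""
    if path.exists():
        return on_event(path)
    return None


def trigger_workflow(path: Path) -> str:
    # The event only says "the file is here". The workflow reads the data later.
    return f"triggered workflow for {path.name}"


def run_sensor_demo() -> list:
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "orders.csv"
        before = file_sensor(target, trigger_workflow)  # file not there yet
        target.write_text("id,amount\n1,10\n")
        after = file_sensor(target, trigger_workflow)  # file has appeared
    return [before, after]
```

The first poll returns nothing and the second one fires the trigger. Note that the sensor passes along only the fact that the file arrived, not the contents of the file.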

Stream processing can also read from Kafka. So can event-driven orchestration support stream processing? In order to answer this question, we need to understand the differences between batch processing and stream processing.

Older ETL/ELT processes required writing their data into a database because they didn’t have another way to perform complex transformations like joins and aggregations. When you persist your data in a database, you're forced into batch processing semantics, which is why most older ETL/ELT processes needed scheduling tools like cron. Airflow and Dagster are similar to cron, with additional enhancements.

With this understanding of orchestrators, I’ll try to use another event-driven orchestrator called Prefect.

Prefect

Prefect is an open-source orchestration platform that looks promising, and it has a native way to consume events. In the code below, line 42 is a decorator applied to a function. When this Python script is run, it is submitted to a running Prefect server for execution. Line 9 contains the configuration to connect to Kafka. Line 21 contains the code that consumes data from Kafka and processes it by simply printing it to the console.

At first glance, this code looks like it may be the solution we need, but can it replace the popular stream processing platforms available today? Let’s see what we actually need for stream processing.

Stream processing requirements

Stream processing is an alternative way of transforming data that keeps your data in motion. This maintains real-time semantics, but an orchestrator would need to meet a few requirements to handle stream processing.

Need to be able to hold state.

Need to distribute tasks to execute in parallel.

Need to shuffle data between tasks.

State enables stream processors to perform aggregations like counting records. Without state, a processor cannot remember the current count from one event to the next. These orchestrators do not have this ability.
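Here is a minimal sketch of what holding state means for a count, assuming a plain in-memory `Counter` as the state store. The function name `running_counts` is hypothetical, and real stream processors keep this state in a fault-tolerant store rather than in local memory.

```python
from collections import Counter
from typing import Iterable, Iterator


def running_counts(events: Iterable[str]) -> Iterator[tuple[str, int]]:
    """Emit the updated count for a key every time an event for that key arrives."""
    state: Counter = Counter()  # survives between events; this is the "state"
    for key in events:
        state[key] += 1
        yield key, state[key]


counts = list(running_counts(["a", "b", "a"]))
# counts == [("a", 1), ("b", 1), ("a", 2)]
```

The second `"a"` event can only produce a count of 2 because the first one was remembered.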

Stream processors also need to distribute their tasks evenly across multiple workers so that they can process large amounts of data in parallel. Processing terabytes of data on a single machine will take a very long time. In a high-throughput use case, the Kafka topic may have many partitions to allow for horizontal scaling. These orchestrators don’t take advantage of those partitions: they have no way to distribute the data across multiple workers to execute parallel tasks. They deploy only one task runner, which has to subscribe to all partitions and process them one after another.
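The difference can be sketched as a partition assignment. This is a simplified round-robin illustration, not Kafka's actual assignment logic, and `assign_partitions` and the worker names are made up for the example.

```python
def assign_partitions(partitions: list[int], workers: list[str]) -> dict[str, list[int]]:
    """Spread topic partitions across workers in round-robin order."""
    assignment: dict[str, list[int]] = {worker: [] for worker in workers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[workers[i % len(workers)]].append(partition)
    return assignment


# A stream processor with three workers splits six partitions evenly.
parallel = assign_partitions(list(range(6)), ["w0", "w1", "w2"])

# A single task runner ends up owning every partition.
single = assign_partitions(list(range(6)), ["runner"])
```

With three workers, each one reads two partitions. With one task runner, all six partitions land on the same process, which is the situation described above.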

Shuffling is how distributed databases move data between workers when performing a join. Together, shuffling and state give stream processors the ability to perform complex transformations. Since orchestrators are neither distributed nor hold state, they cannot perform complex transformations.
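As a rough sketch of a shuffle, the example below hash-partitions two record sets by their join key so that matching rows land on the same worker, and then joins each worker's rows locally. The names `shuffle` and `shuffled_join` are hypothetical, workers are simulated as dictionary buckets, and `zlib.crc32` is used only because it gives a stable hash across runs.

```python
import zlib
from collections import defaultdict


def shuffle(records: list[dict], key: str, num_workers: int) -> dict[int, list[dict]]:
    """Route each record to a worker based on a hash of its join key."""
    buckets: dict[int, list[dict]] = defaultdict(list)
    for record in records:
        worker = zlib.crc32(str(record[key]).encode()) % num_workers
        buckets[worker].append(record)
    return buckets


def shuffled_join(left: list[dict], right: list[dict], key: str, num_workers: int = 2) -> list[dict]:
    left_buckets = shuffle(left, key, num_workers)
    right_buckets = shuffle(right, key, num_workers)
    joined = []
    for worker in range(num_workers):
        # Each worker sees only its own buckets, yet all rows for a key are there.
        index = defaultdict(list)
        for row in right_buckets.get(worker, []):
            index[row[key]].append(row)
        for row in left_buckets.get(worker, []):
            for match in index[row[key]]:
                joined.append({**row, **match})
    return joined


orders = [{"user_id": 1, "total": 10}, {"user_id": 2, "total": 5}, {"user_id": 1, "total": 7}]
users = [{"user_id": 1, "name": "ann"}, {"user_id": 2, "name": "bob"}]
rows = sorted(shuffled_join(orders, users, "user_id"), key=lambda r: (r["user_id"], r["total"]))
```

Because both sides are routed with the same hash, no worker needs to see the full data set to produce a correct join.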

So no, orchestrators cannot replace today’s popular stream processors. So what is event-driven orchestration supposed to do? The answer is really notifications. Event-driven orchestrations are really notification consumers. They only trigger tasks and do not define the task itself. The tasks that orchestrators invoke could be a batch process that can handle large volumes of data but that data wouldn’t come from Kafka. Batch data comes from data stores that hold data at rest.

Drivers are like DAGs

In Spark and Flink, you define your workflow logic in a driver just as you would in an Airflow DAG. Drivers are written in Java, Scala, or Python and follow a functional programming (FP) paradigm. FP is beyond the scope of this post, but to summarize, FP treats functions as first-class citizens. You can serialize functions and pass them to other workers in a distributed system to run in parallel. These drivers define the logic in the workflow without actually running the logic locally. Instead, the functions get executed remotely to distribute the workload and scale out horizontally.

These drivers aren’t orchestrators in the way you would think of Airflow, Dagster, and Prefect. The main difference is that DAG orchestrators define task workflows but do not implement the logic in the task. Drivers do implement the workflow logic.
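The driver idea can be sketched without Spark or Flink. In this toy version, the hypothetical `Plan` class records functions when you call `map` and runs nothing until `run` is called, at which point each partition is handed to a worker. Threads stand in for remote workers here; a real engine would serialize the functions and ship them to other machines.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


class Plan:
    """A hypothetical driver-side plan: records steps now, runs them later."""

    def __init__(self, steps: Sequence[Callable] = ()):
        self.steps = tuple(steps)

    def map(self, fn: Callable) -> "Plan":
        # Nothing executes here; the function is only added to the plan.
        return Plan(self.steps + (fn,))

    def run(self, partitions: list[list], workers: int = 2) -> list[list]:
        def run_partition(rows: list) -> list:
            for fn in self.steps:
                rows = [fn(row) for row in rows]
            return rows

        # Threads stand in for remote workers; each one gets a whole partition.
        with ThreadPoolExecutor(max_workers=workers) as pool:
            return list(pool.map(run_partition, partitions))


plan = Plan().map(lambda x: x + 1).map(lambda x: x * 2)  # defines the logic only
result = plan.run([[1, 2], [3]])  # executes the logic once per partition
```

The driver owns the logic (the two lambdas) as well as the order of the steps, which is exactly what a DAG orchestrator does not do.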

Streaming workflow builders

There are nice streaming workflow builders on the market today, but they are not orchestrators. Confluent’s Stream Designer and StreamSets are examples. These builders can build a streaming data pipeline from source to sink.

These builders put tasks together like puzzle pieces using schemas. Schemas define the shape of the data. A task can be connected to the next one only when the output schema of the first matches the input schema of the second. If the schemas don’t match, the builder cannot put the tasks together.
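The puzzle-piece rule can be sketched as a schema check at connection time. This is an illustration in plain Python and not how Stream Designer or StreamSets are implemented; `Task`, `connect`, and the example schemas are all made up.

```python
from dataclasses import dataclass

Schema = dict[str, str]  # field name -> type name


@dataclass
class Task:
    name: str
    input_schema: Schema
    output_schema: Schema


def connect(pipeline: list[Task], task: Task) -> list[Task]:
    """Append a task only if its input matches the previous task's output."""
    if pipeline and pipeline[-1].output_schema != task.input_schema:
        raise ValueError(
            f"{pipeline[-1].name} output does not match {task.name} input"
        )
    return pipeline + [task]


raw = {"user_id": "int", "amount": "float"}
totals = {"user_id": "int", "total": "float"}

source = Task("read_orders", {}, raw)
aggregate = Task("sum_by_user", raw, totals)
sink = Task("write_totals", totals, {})

pipeline = connect(connect(connect([], source), aggregate), sink)
```

Trying `connect([source], sink)` raises a `ValueError`, because the sink expects the aggregated shape and the source emits raw orders.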

Is Closing The Gap Possible?

I really liked using Prefect as a way to trigger a workflow. It has a beautiful dashboard for monitoring your event-driven workflows. Plus, it’s open source, which is even better. I’d say it’s a “prefect” orchestration tool. But it’s event-driven, not stream processing. If you need to process data in real time, you’ll still need the stream processing tools available today, like Apache Flink, Apache Spark, ksqlDB, etc.

But can orchestrators do stream processing?

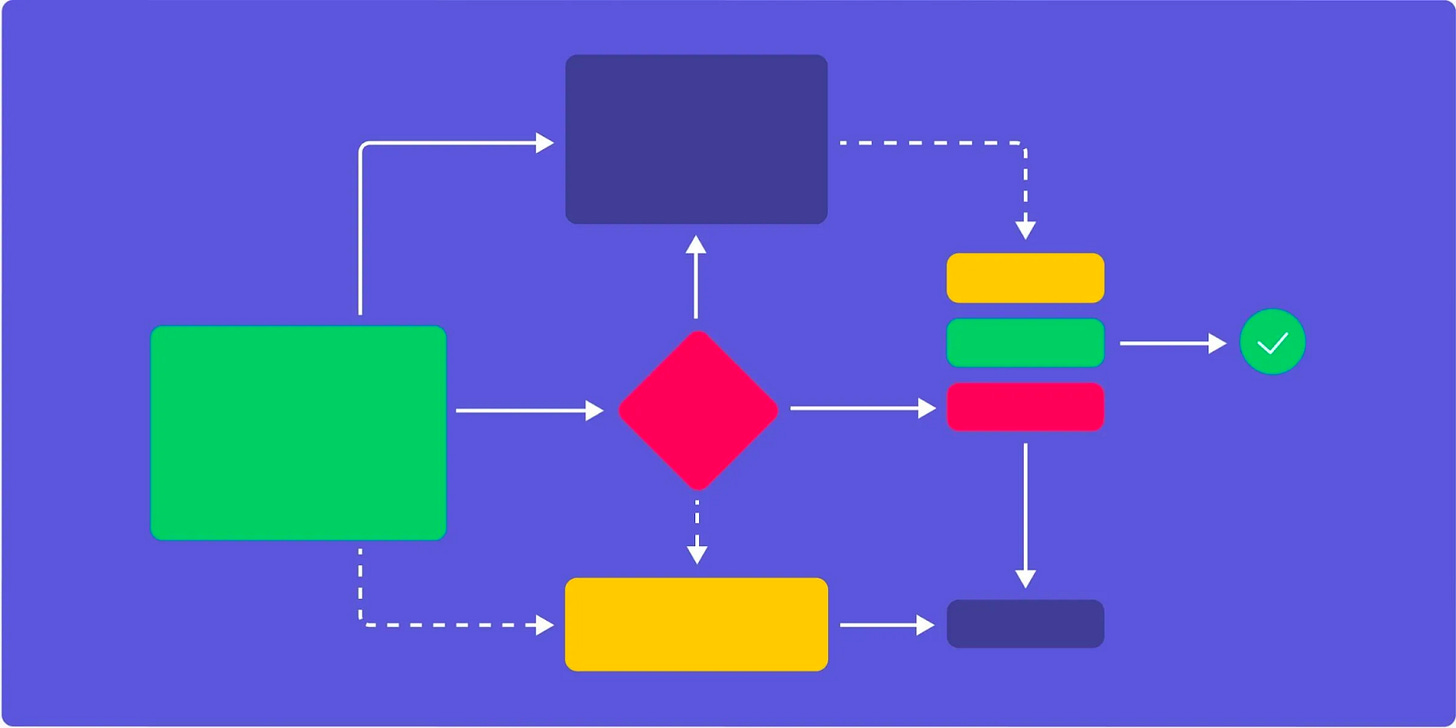

Not really. Orchestrators assemble discrete tasks in a workflow. To the orchestrator, tasks can be arranged in any order like square blocks. Tasks in stream processing are arranged like puzzle pieces and must follow the input and output of each subsequent task. Think of stream processors as a large SQL statement with many CTEs that feed into one another sequentially. Then at the end of the statement, there is one data set that is stored somewhere. That is simply not what workflow orchestrators do.

Great write-up. We also recently published an article on how to bridge Backend and Data Engineering teams using Event Driven Architecture - https://packagemain.tech/p/bridging-backend-and-data-engineering

You missed the original streaming workflow builder: Apache NiFi. https://nifi.apache.org/